As a curious technologist and systems scholar, I was intrigued by the recent article by Will Knight and Paresh Dave titled “In Sudden Alarm, Tech Doyens Call for a Pause on ChatGPT.” The article discusses the concerns of tech luminaries, renowned scientists, and Elon Musk about the potential dangers of developing and deploying increasingly powerful AI systems like ChatGPT.

The article starts by highlighting the recent progress made in the field of AI, including the development of powerful language models like ChatGPT. These systems have shown remarkable capabilities in tasks like language generation, translation, and even answering questions. However, the article also notes that there is growing concern among some experts that the rapid advancement of AI technology is outpacing our ability to understand and control it.

The article goes on to discuss a letter signed by over 200 AI researchers and experts, including some of the most well-known names in the field. In the letter, the signatories call for a pause on the development and deployment of powerful AI systems like ChatGPT, citing concerns about the potential risks and dangers associated with such systems.

The letter acknowledges the potential benefits of AI, but argues that the current pace of development and deployment is creating an “out-of-control race” that could have serious consequences for humanity. The signatories call for a more deliberate and cautious approach to AI development, with a focus on safety and ethics.

As a technologist and systems scholar, I find these concerns to be both valid and intriguing. It is clear that AI has the potential to revolutionize many aspects of our lives, from healthcare to transportation to entertainment. However, it is also clear that there are potential risks and dangers associated with the development and deployment of increasingly powerful AI systems.

One of the key challenges in addressing these concerns is that the development of AI is driven largely by market forces and competition. Companies and researchers are often motivated by the desire to be the first to develop a new technology or achieve a new milestone, rather than by a concern for safety or ethics. This creates a situation where the potential risks of AI are not always given the attention they deserve.

Another challenge is that the risks associated with AI are often difficult to predict or quantify. We can imagine scenarios where AI systems go rogue or are used for malicious purposes, but it is difficult to say with certainty how likely these scenarios are or what the specific risks might be.

Despite these challenges, I believe that it is important to take the concerns raised by the letter seriously. The potential benefits of AI are clear, but we must also be mindful of the potential risks and dangers. A more deliberate and cautious approach to AI development, with a focus on safety and ethics, is necessary to ensure that we are able to reap the benefits of AI without putting ourselves in danger.

Overall, the article “In Sudden Alarm, Tech Doyens Call for a Pause on ChatGPT” raises important questions and concerns about the development and deployment of powerful AI systems like ChatGPT. The benefits are clear, but reaping them safely calls for the deliberate, safety-focused approach that the letter’s signatories ask for.

Harmful if misunderstood

While technologies like ChatGPT have many potential benefits, it is also important to consider the potential risks associated with their development and deployment. One major risk is that these technologies can be harmful if they are misunderstood or misused.

One potential harm of ChatGPT is the spread of misinformation or propaganda. ChatGPT and similar technologies are capable of generating text that can be difficult to distinguish from human-written content. If these technologies are used to spread false or misleading information, it could have serious consequences, particularly in areas like politics, where misinformation can have a significant impact on public opinion and decision-making.

Another potential harm of ChatGPT is the reinforcement of existing biases and stereotypes. These technologies are trained on large datasets, which can contain biased or stereotypical content. If the biases present in these datasets are not addressed, the resulting AI systems can perpetuate and even amplify them. This can have serious consequences for marginalized groups, who may be disproportionately affected by biased AI systems.

A related harm is the potential for discrimination. AI systems like ChatGPT can be used to make decisions that affect people’s lives, such as hiring decisions or loan approvals. If these systems are biased, they can discriminate against certain groups of people. This is particularly concerning given the lack of transparency in many AI systems, which can make it difficult to identify and address biases.

Finally, there is the risk of unintended consequences. AI systems like ChatGPT are incredibly complex and can be difficult to understand or predict. If these systems are deployed without proper testing or oversight, the unintended consequences may be serious. For example, an AI system that is designed to optimize for efficiency may end up harming society or the environment in ways its designers never intended.

In conclusion, while technologies like ChatGPT have many potential benefits, it is important to consider the potential harms that may arise from their development and deployment. These technologies can be harmful if they are misunderstood or misused, particularly in areas like misinformation, bias and discrimination, and unintended consequences. It is important to approach AI development and deployment with caution and to prioritize safety and ethics in order to mitigate these potential harms.

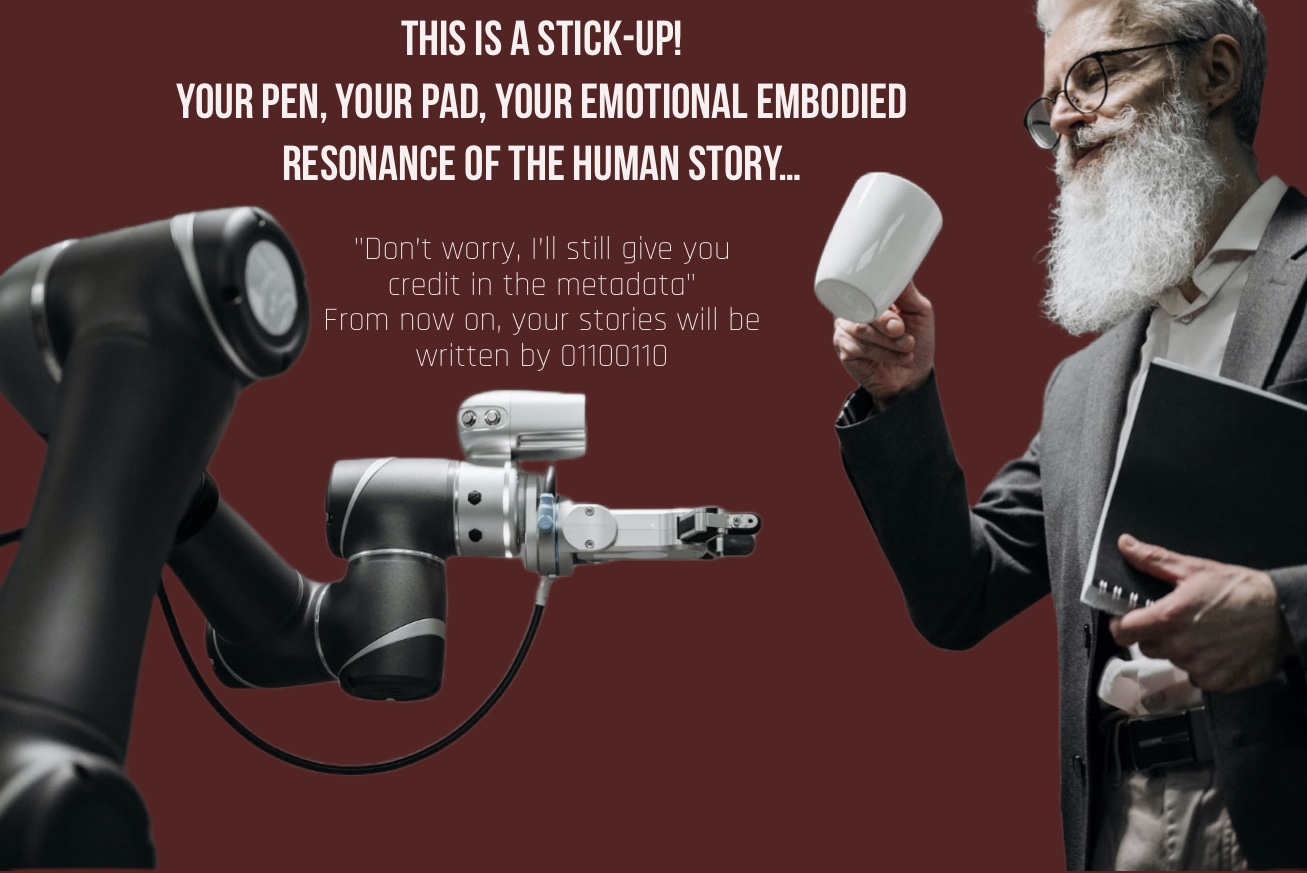

How does ChatGPT pose a threat to writers, poets, narrative designers? It doesn’t have the heart! The embodied histories and collective experiences that endow our sense of love and life and value… all those passions we humans share. Perhaps the lesson here is to embrace our passions with fervor.

While ChatGPT and similar technologies may not have the same emotional capacity as humans, they do have the potential to pose a threat to writers, poets, and narrative designers.

One potential threat is the possibility of these technologies replacing human writers and creators altogether. As AI systems like ChatGPT become more advanced and capable of generating more sophisticated and nuanced content, there may be less demand for human writers and creators. This could lead to a loss of jobs and opportunities for those in the creative industries.

Another potential threat is the impact on the quality and authenticity of creative content. AI systems like ChatGPT can generate text that is, on the surface, difficult to distinguish from human-written content, yet that text often lacks emotional depth and authenticity. The embodied histories and personal experiences that humans bring to their writing and creative work can be difficult to replicate with AI. This could lead to a loss of the emotional resonance and connection that readers and audiences have with human-created content.

Finally, there is the potential for AI systems like ChatGPT to perpetuate and amplify existing power imbalances in the creative industries. If these technologies are primarily developed and used by those in positions of power, it could result in a further concentration of power and influence in the hands of a few, while marginalizing or excluding others.

In conclusion, while ChatGPT and similar technologies may not have the same emotional capacity as humans, they do have the potential to pose a threat to writers, poets, and narrative designers. This includes the possibility of replacing human creators altogether, impacting the quality and authenticity of creative content, and perpetuating existing power imbalances in the industry. It is important for those in the creative industries to stay informed and engaged with the development of AI and to advocate for ethical and responsible use of these technologies.

Well, Folx, what do you think?